Dropping a user whose objects are in different databases of a PG instance

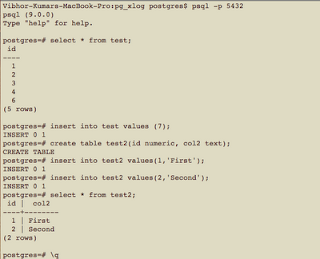

One of my colleagues asked me about this. She was doing some testing and wanted to drop a user that owns objects across several databases. When dropping such a user/role, the DBA/admin gets the following error message:

    postgres=# drop user test;
    ERROR: role "test" cannot be dropped because some objects depend on it
    DETAIL: 2 objects in database test

The error message gives the admin sufficient information: objects that depend on, or are owned by, the user exist in another database. There are two methods for dropping such a user:

1. Reassign all the objects owned by the user to some other user and then drop the user. This is very useful if an employee who has left the company wrote procedures or other objects that are still used by an application or process. The commands are:

       REASSIGN OWNED BY old_role TO new_role;
       DROP USER old_role;

   Note: REASSIGN OWNED only affects objects in the current database, so it needs to be executed in every database of the PG instance.

2. First Drop all the objects own